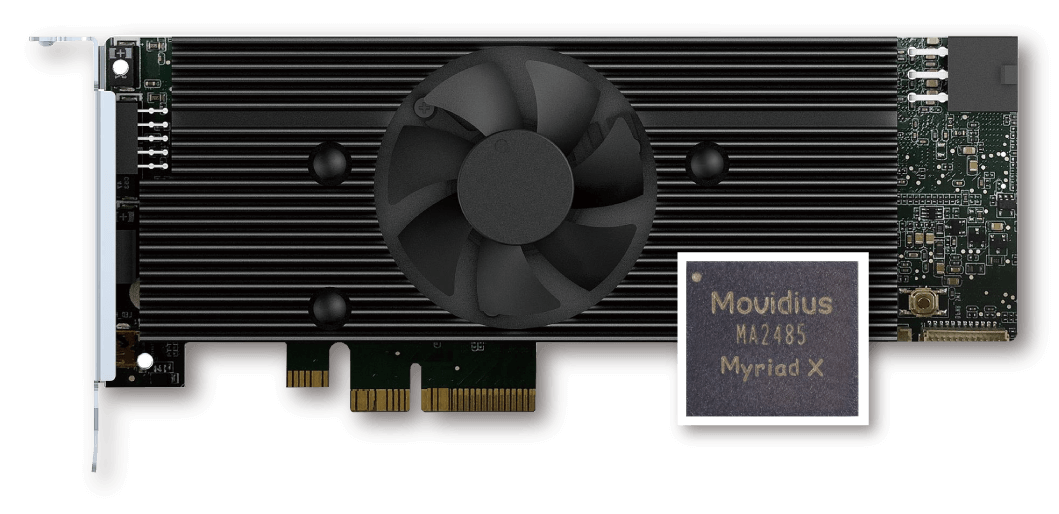

IEI Mustang-V100-MX8 – Vision Accelerator Card

• Computing Accelerator Card with 8 x Movidius Myriad X MA2485 VPU, PCIe Gen2 x4 interface, RoHS

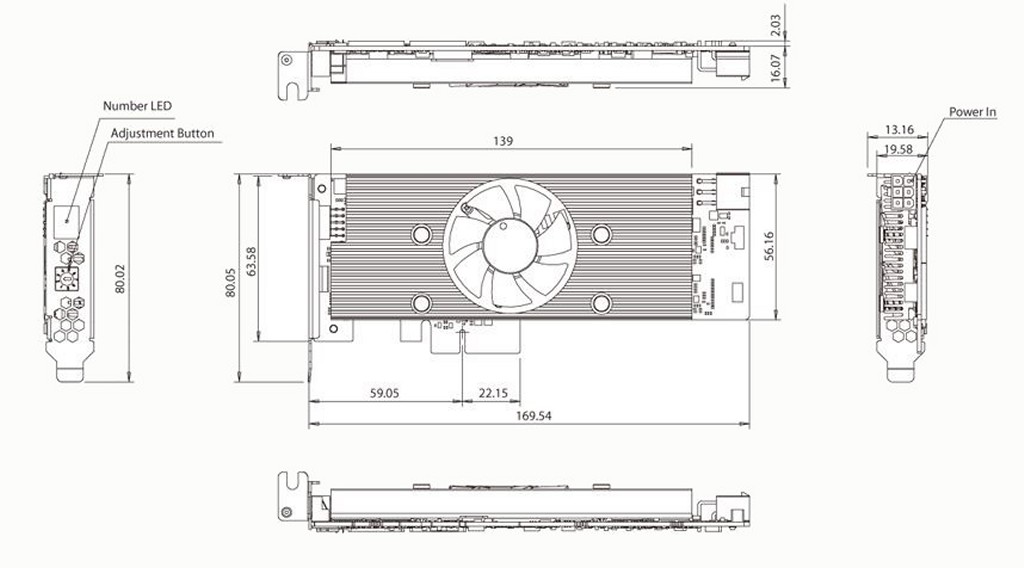

• Half-Height, Half-Length, Single-Slot compact size

• Low power consumption, approximately 2.5 W per Intel Movidius Myriad X VPU

• Supports the OpenVINO toolkit; AI edge computing ready

• Eight Intel Movidius Myriad X VPUs can execute eight topologies simultaneously

Description

Mustang-V100-MX8

Accelerate To The Future

Intel Vision Accelerator Design with Intel Movidius VPU.

A Perfect Choice for AI Deep Learning Inference Workloads

Powered by Open Visual Inference & Neural Network Optimization (OpenVINO) toolkit

- Half-Height, Half-Length, Single-Slot compact size

- Low power consumption, approximately 2.5 W per Intel Movidius Myriad X VPU

- Supports the OpenVINO toolkit; AI edge computing ready

- Eight Intel Movidius Myriad X VPUs can execute eight topologies simultaneously
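The "eight topologies on eight VPUs" claim can be pictured as a one-to-one mapping between networks and VPUs. The sketch below is a pure-Python illustration of that mapping, not the card's actual scheduler: `assign_topologies`, `run_on_vpu`, and `run_all` are hypothetical names, the inference call is a placeholder, and the topology names are borrowed from the card's supported-topology list. On real hardware the OpenVINO runtime would presumably decide how work is distributed.

```python
from concurrent.futures import ThreadPoolExecutor

NUM_VPUS = 8  # the card carries eight Myriad X VPUs

# Hypothetical workload: one topology per VPU.
TOPOLOGIES = [
    "AlexNet", "GoogleNet V1", "Yolo Tiny V1", "Yolo Tiny V2",
    "Yolo V2", "SSD300", "ResNet-18", "Faster-RCNN",
]


def assign_topologies(topologies, num_vpus=NUM_VPUS):
    """Give each topology its own VPU index; reject more topologies than VPUs."""
    if len(topologies) > num_vpus:
        raise ValueError(f"{len(topologies)} topologies but only {num_vpus} VPUs")
    return {vpu: name for vpu, name in enumerate(topologies)}


def run_on_vpu(vpu_index, topology):
    """Placeholder for a real inference request; it only returns a label."""
    return f"VPU {vpu_index}: {topology}"


def run_all(topologies):
    """Submit one job per VPU and collect results in VPU order."""
    plan = assign_topologies(topologies)
    with ThreadPoolExecutor(max_workers=NUM_VPUS) as pool:
        futures = [pool.submit(run_on_vpu, vpu, name) for vpu, name in plan.items()]
        return [future.result() for future in futures]


if __name__ == "__main__":
    for line in run_all(TOPOLOGIES):
        print(line)
```

Asking for a ninth topology raises a `ValueError`, which mirrors the one-network-per-VPU limit described above.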

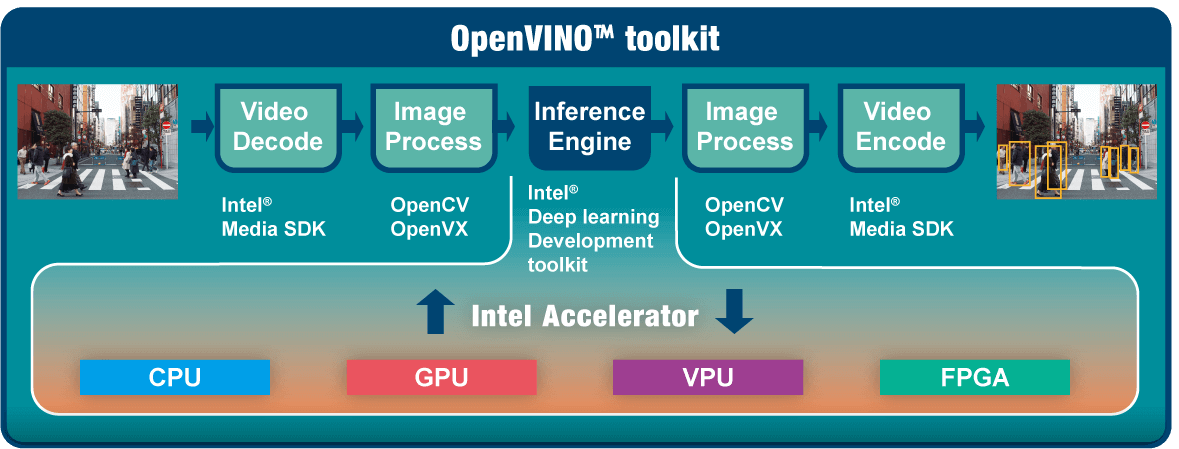

OpenVINO toolkit

The OpenVINO toolkit is based on convolutional neural networks (CNNs). It extends workloads across Intel hardware and maximizes performance.

It can optimize pre-trained deep learning models from frameworks such as Caffe, MXNet, and TensorFlow into an IR (intermediate representation) binary file, then run the inference engine heterogeneously across Intel hardware such as CPUs, GPUs, the Intel Movidius Neural Compute Stick, and FPGAs.
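As a rough illustration of the "optimize to IR, then run on Intel hardware" flow, the sketch below loads an IR model onto a device. It assumes the legacy Inference Engine Python API (`IECore`, `read_network`, `load_network`) shipped with older OpenVINO releases, assumes an IR model is stored as an `.xml` topology file with a matching `.bin` weights file, and assumes `"HDDL"` is the device name used for this class of multi-VPU card. The helper names and file paths are hypothetical, and the API differs between OpenVINO releases, so check the documentation for the version you install.

```python
from pathlib import Path


def ir_pair(xml_path):
    """Return the (.xml, .bin) file pair that makes up an IR model."""
    xml = Path(xml_path)
    if xml.suffix != ".xml":
        raise ValueError("expected the IR topology file (.xml)")
    return xml, xml.with_suffix(".bin")


def load_on_device(xml_path, device="HDDL"):
    """Load an IR model onto a device (assumes the legacy Inference Engine API)."""
    # Imported lazily so ir_pair() stays usable without OpenVINO installed.
    from openvino.inference_engine import IECore

    xml, weights = ir_pair(xml_path)
    ie = IECore()
    net = ie.read_network(model=str(xml), weights=str(weights))
    # The device name is an assumption; "CPU" or "GPU" select other targets.
    return ie.load_network(network=net, device_name=device)
```

A call such as `load_on_device("models/alexnet.xml")` would need both the OpenVINO runtime and the card's driver stack installed. `ir_pair` can be checked on its own.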

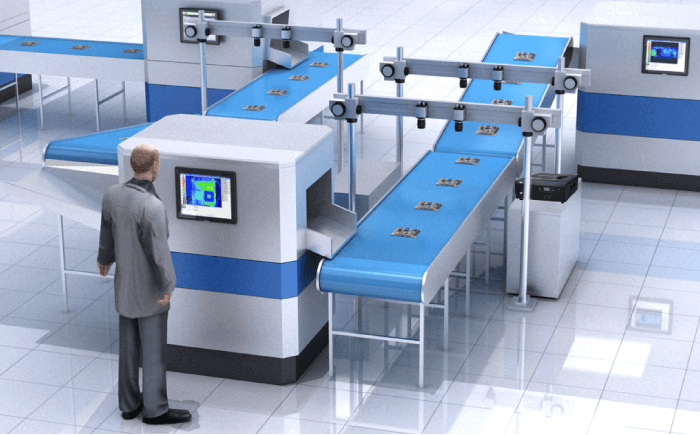

Applications

|

|

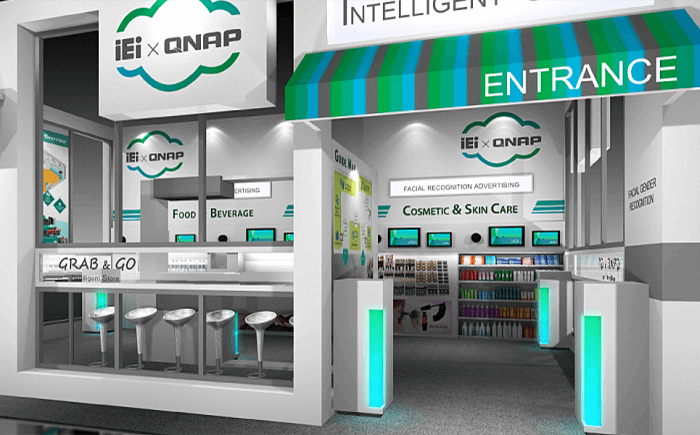

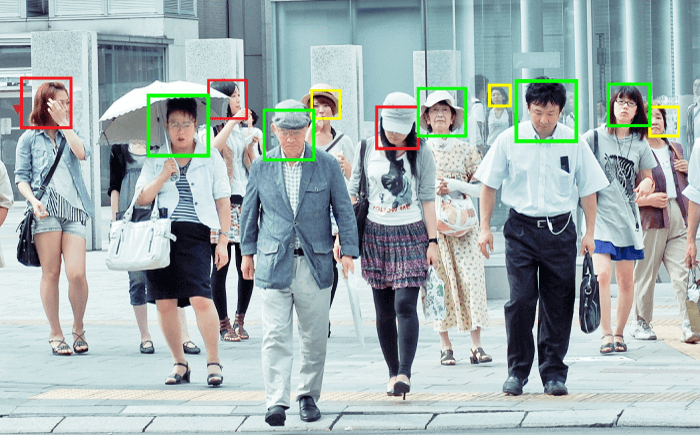

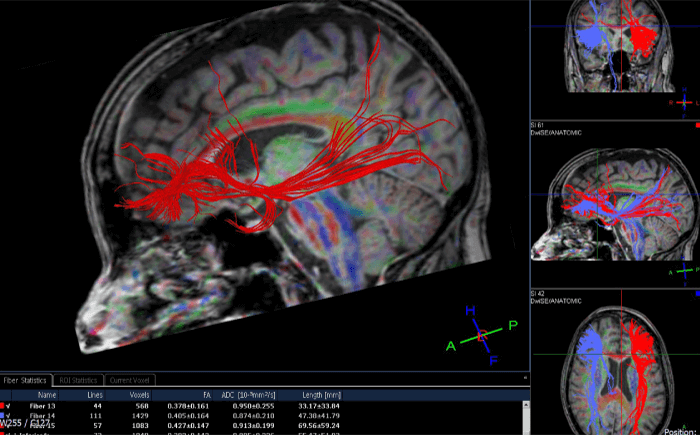

| Machine Vision | Smart Retail |

|

|

| Surveillance | Medical Diagnostics |

Features

- Operating Systems

  - Ubuntu 16.04.3 LTS 64-bit, CentOS 7.4 64-bit (Windows 10 support planned for the end of 2018; more operating systems coming soon)

- OpenVINO Toolkit

  - Intel Deep Learning Deployment Toolkit

    - Model Optimizer

    - Inference Engine

  - Optimized computer vision libraries

  - Intel Media SDK

  - *OpenCL graphics drivers and runtimes

- Currently supported topologies: AlexNet, GoogleNet V1, Yolo Tiny V1 & V2, Yolo V2, SSD300, ResNet-18, Faster-RCNN (more variants coming soon)

- High flexibility: the Mustang-V100-MX8 is developed on the OpenVINO toolkit structure, which allows models trained in frameworks such as Caffe, TensorFlow, and MXNet to run on it after conversion to optimized IR.

*OpenCL is a trademark of Apple Inc., used by permission by Khronos.
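The conversion step mentioned in the feature list (trained model to optimized IR) is normally done with the Model Optimizer. The sketch below only builds a command line and does not run it. It assumes the `mo.py` script and the `--input_model`, `--output_dir`, and `--data_type` options from older OpenVINO releases, and assumes FP16 is the precision wanted for Myriad X VPUs. The function name and file names are hypothetical, and newer toolkit versions expose a different interface.

```python
def model_optimizer_command(input_model, output_dir, data_type="FP16"):
    """Build an argv list for the Model Optimizer script (nothing is executed)."""
    if data_type not in ("FP16", "FP32"):
        raise ValueError(f"unsupported data type: {data_type}")
    return [
        "python3", "mo.py",            # assumed script name from older releases
        "--input_model", input_model,  # trained model, e.g. a Caffe .caffemodel
        "--output_dir", output_dir,    # where the IR files would be written
        "--data_type", data_type,      # FP16 is assumed for VPU targets
    ]


cmd = model_optimizer_command("alexnet.caffemodel", "ir/")
print(" ".join(cmd))
```

Building the argument list separately from running it makes the command easy to inspect or log before handing it to something like `subprocess.run`.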

Specification

| Model Name | Mustang-V100-MX8 |

| Main Chip | Eight Intel Movidius Myriad X MA2485 VPU |

| Operating Systems | Ubuntu 16.04.3 LTS 64-bit, CentOS 7.4 64-bit (Windows 10 support planned for the end of 2018; more operating systems coming soon) |

| Dataplane Interface | PCI Express x4 |

| Power Consumption | <30W |

| Operating Temperature | 5°C ~ 55°C (ambient temperature) |

| Cooling | Active fan |

| Dimensions | Half-Height, Half-Length, Single-width PCIe |

| Support Topology | AlexNet, GoogleNet V1/V2/V4, Yolo Tiny V1/V2, Yolo V2/V3, SSD300, SSD512, ResNet-18/50/101/152, DenseNet121/161/169/201, SqueezeNet 1.0/1.1, VGG16/19, MobileNet-SSD, Inception-ResNetv2, Inception-V1/V2/V3/V4, SSD-MobileNet-V2-coco, MobileNet-V1-0.25-128, MobileNet-V1-0.50-160, MobileNet-V1-1.0-224, MobileNet-V1/V2, Faster-RCNN |

| Operating Humidity | 5% ~ 90% |

*A standard PCIe slot provides 75 W of power; this feature is reserved for users in case of a different system configuration.
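The power figures quoted on this page can be cross-checked with simple arithmetic: eight VPUs at roughly 2.5 W each, a card rated below 30 W, and a 75 W PCIe slot. The sketch below does only that subtraction. The function and key names are hypothetical, and the "headroom" value is a plain difference between published figures, not a measured draw for the rest of the card.

```python
def power_budget(vpu_count=8, watts_per_vpu=2.5, card_limit_w=30.0, slot_limit_w=75.0):
    """Compare the per-VPU estimate with the card rating and the slot supply."""
    vpu_total = vpu_count * watts_per_vpu
    return {
        "vpu_total_w": vpu_total,                     # all VPUs together
        "card_headroom_w": card_limit_w - vpu_total,  # left under the <30 W rating
        "slot_margin_w": slot_limit_w - card_limit_w, # slot supply above the rating
    }


budget = power_budget()
for name, watts in budget.items():
    print(f"{name}: {watts} W")
```

With the default values, the eight VPUs together account for about 20 W, which is consistent with the card's rating of under 30 W.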

Ordering Information

| Part No. | Description |

| Mustang-V100-MX8-R10 | Computing Accelerator Card with 8 x Movidius Myriad X MA2485 VPU, PCIe Gen2 x4 interface, RoHS |

| Mustang-V100-MX4-R10 | Computing Accelerator Card with 4 x Intel Movidius Myriad X MA2485 VPU, PCIe Gen2 x2 interface, RoHS |

| Mustang-MPCIE-MX1 | Deep learning inference accelerating miniPCIe card with 2 x Intel Movidius Myriad X MA2485 VPU, miniPCIe interface 30mm x 50mm, RoHS |

| Mustang-M2AE-MX1 | Computing Accelerator Card with 1 x Intel Movidius Myriad X MA2485 VPU, M.2 AE key interface, 2230, RoHS |

| Mustang-M2BM-MX2-R10 | Deep learning inference accelerating M.2 BM key card with 2 x Intel Movidius Myriad X MA2485 VPU, M.2 interface 22mm x 80mm, RoHS |

BVM Customisation Service

If you’re unable to find an off-the-shelf product that meets your specific requirements, contact our in-house design team. They have the expertise to customise an existing product to your exact specifications or to design a new, customised solution from start to finish.

Our design professionals are dedicated to delivering exceptional results, ensuring that the final product not only meets but exceeds your expectations. When you collaborate with our team, you open the door to a world of possibilities, where innovation and creativity converge to bring your vision to life.

Whether it’s modifying an existing product or crafting an entirely new one, our design experts are committed to providing you with a comprehensive, end-to-end solution that perfectly suits your needs. Your satisfaction is our top priority, and we’re here to turn your ideas into reality.

Design | Develop | Test | Manufacture

With over three decades of experience in designing custom industrial and embedded computer and display solutions across a wide array of industries, we’re here to turn your ideas into reality.

Here’s a selection of our design, manufacturing & associated services:

Design to Order: OEM/ODM Embedded Product Design Services

For customers designing a brand-new product from scratch or working with an existing prototype.

Build to Order: Embedded Computer Design and Customisation Services

Take an existing system and we can:

Build to Order: Racks and Towers, Peli Case PCs and Mini-ITX PCs

Embedded Software Services: Configuration, Integration and Deployment

Porting, Integration & Deployment

ALL OUR DESIGN AND MANUFACTURING SERVICES INCLUDE:

| Testing | Materials Management | Logistics and Tracking | External Manufacturing |

| • Burn in Test • Temp / Thermal Testing • Environmental Testing • Safety Testing • Software Compatibility Test | • Vendor Selection and Component Procurement • Product Traceability • Obsolescence, End of Life and Last Time Procurement Management • Simple to use on-line RMA System | • Traceability of Shipments • Product Labelling • OEM/Branded Packaging • System Branding • Custom Labels | • Surface Mount: High Speed Placement • Conventional Through Hole Insertion & Assembly • Automated Optical Inspection • Bespoke PCB Test |

Manufacturer : IEI

Card Type

- Card Interface Type : PCIe

- Card Function : Vision Accelerator

CPU

- Powered By : Intel

- CPU Model : MA2485 VPU

Memory

- Memory Installed : 8Gb

Operating System

- OS : Linux, Windows

Categories : AI Accelerator Cards, AI Inference Accelerator Cards