Description

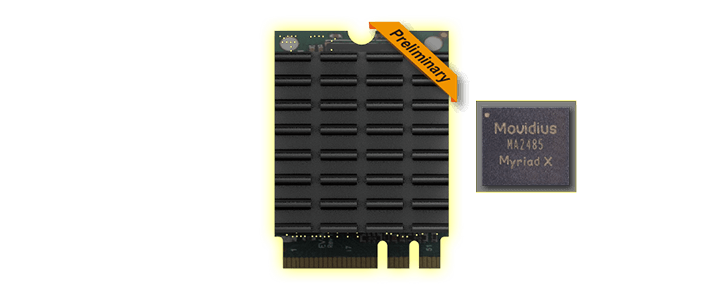

Mustang-M2AE-MX1

Intel Vision Accelerator Design with Intel Movidius VPU

A Perfect Choice for AI Deep Learning Inference Workloads

Powered by Open Visual Inference & Neural Network Optimization (OpenVINO) toolkit

- Compact size M.2 2230 card. (22 x 30mm)

- Low power consumption ,approximate 5W for one Intel Movidius Myriad X VPU.

- Supported OpenVINO toolkit, AI edge computing ready device

Intel® Distribution of OpenVINO™ toolkit

Intel® Distribution of OpenVINO™ toolkit is based on convolutional neural networks (CNN), the toolkit extends workloads across multiple types of Intel® platforms and maximizes performance.

It can optimize pre-trained deep learning models such as Caffe, MXNET, and ONNX Tensorflow. The tool suite includes more than 20 pre-trained models, and supports 100+ public and custom models (includes Caffe*, MXNet, TensorFlow*, ONNX*, Kaldi*) for easier deployments across Intel® silicon products (CPU, GPU/Intel® Processor Graphics, FPGA, VPU).

Features

- Operating Systems

Ubuntu 16.04.3 LTS 64bit, CentOS 7.4 64bit, Windows® 10 64bit - OpenVINO™ toolkit

- Intel® Deep Learning Deployment Toolkit

- – Model Optimizer

- – Inference Engine

- Optimized computer vision libraries

- Intel® Media SDK

- Intel® Deep Learning Deployment Toolkit

- Current Supported Topologies: AlexNet, GoogleNetV1/V2, MobileNet SSD, MobileNetV1/V2, MTCNN, Squeezenet1.0/1.1, Tiny Yolo V1 & V2, Yolo V2, ResNet-18/50/101

* For more topologies support information please refer to Intel® OpenVINO™ Toolkit official website. - High flexibility, Mustang-M2AE-MX1 develop on OpenVINO™ toolkit structure which allows trained data such as Caffe, TensorFlow, MXNet, and ONNX to execute on it after convert to optimized IR.

Applications

|

|

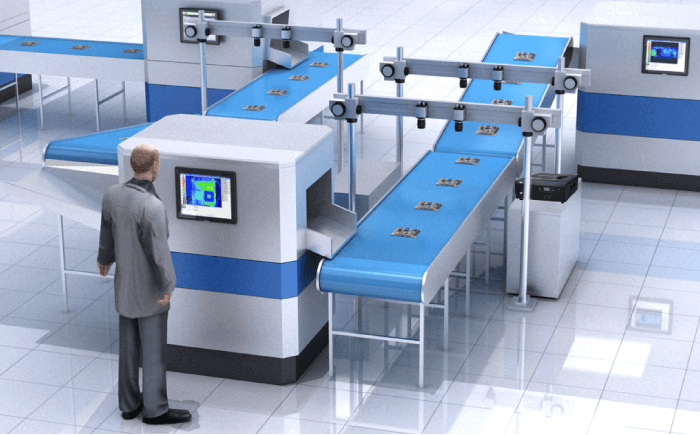

| Machine Vision | Smart Retail |

|

|

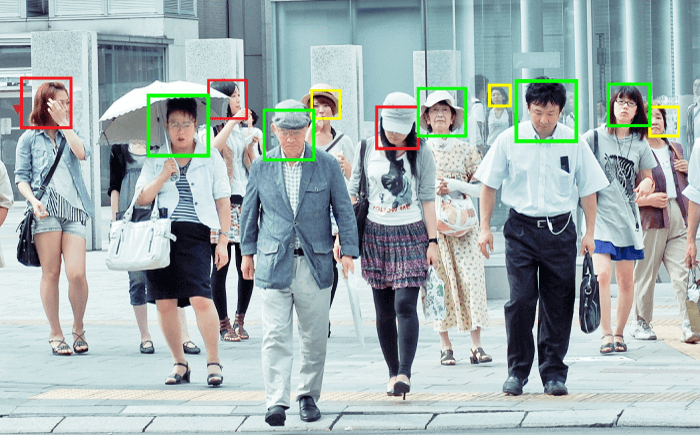

| Surveillance | Medical Diagnostics |

Specification

| Model Name | Mustang-M2AE-MX1 |

| Main Chip | 1 x Intel Movidius Myriad X MA2485 VPU |

| Operating Systems | Ubuntu 16.04.3 LTS 64bit, CentOS 7.4 64bit, Windows 10 64bit |

| Dataplane Interface | M.2 AE Key |

| Power Consumption | Approxinate 5W |

| Operating Temperature | 0°C~55°C (In TANK AIoT Dev. Kit) |

| Cooling | Passive Heatsink |

| Dimensions | 22 x 30 mm |

| Support Topology | AlexNet, GoogleNetV1/V2, Mobile_SSD, MobileNetV1/V2, MTCNN, Squeezenet1.0/1.1, Tiny Yolo V1 & V2, Yolo V2, ResNet-18/50/1015% ~ 90% |

| Operating Humidity | 5% ~ 90% |

*Standard PCIe slot provides 75W power, this feature is preserved for user in case of different system configuration

Ordering Information

| Part No. | Description |

| Mustang-V100-MX8-R10 | Computing Accelerator Card with 8 x Movidius Myriad X MA2485 VPU, PCIe Gen2 x4 interface, RoHS |

| Mustang-V100-MX4-R10 | Computing Accelerator Card with 4x Intel Movidius Myriad X MA2485 VPU, PCIe Gen 2 x 2 interface, RoHS |

| Mustang-MPCIE-MX1 | Deep learning inference accelerating miniPCIe card with 2 x Intel Movidius Myriad X MA2485 VPU, miniPCIe interface 30mm x 50mm, RoHS |

| Mustang-M2AE-MX1 | Computing Accelerator Card with 1 x Intel Movidius Myriad X MA2485 VPU,M.2 AE key interface, 2230, RoHS |

| Mustang-M2BM-MX2-R10 | Deep learning inference accelerating M.2 BM key card with 2 x Intel Movidius Myriad X MA2485 VPU, M.2 interface 22mm x 80mm, RoHS |